Technological advancements in medicine can benefit patients and clinicians, but new approaches can also mean new risks. Dr Helen Hartley, Head of Underwriting Policy at Medical Protection, discusses where the liability lies for artificial intelligence.

The concept of artificial intelligence (AI) may lead some to imagine robots running amok in a bid to destroy humanity, while others may wonder whether their livelihoods could be threatened by AI advancements.

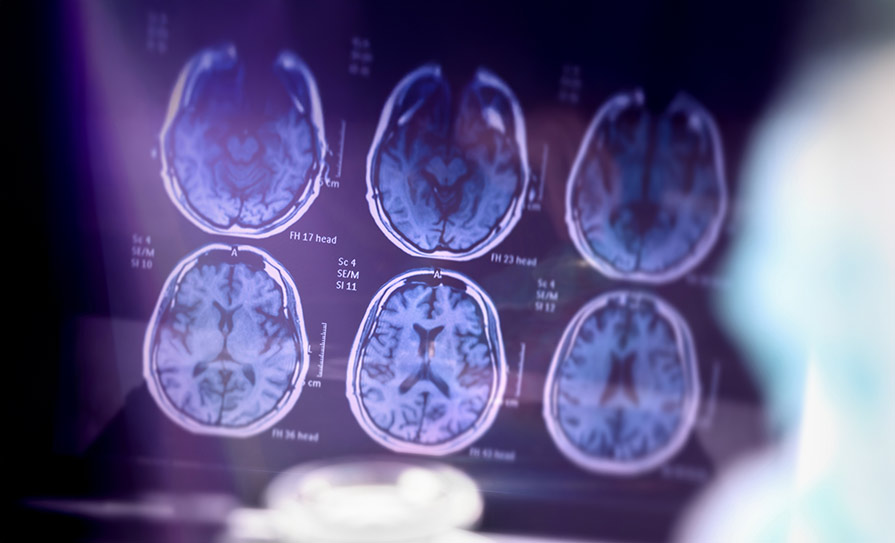

Radiologists are perhaps at the forefront of medical innovation, particularly in medical imaging. Over the past 10 years, publications on AI in radiology have increased from 100-150 to 700-800 per year.

Recently, a study in the journal Nature Medicine reported on an algorithm that can learn to read complex eye scans. When tested, it performed as well as two of the world’s leading retina specialists and did not miss a single urgent case.

Though the benefits of AI in medicine are impossible to ignore, AI is not infallible. When mistakes occur, where does liability lie?

Making AI work for you

Clinicians should ensure that any robot or algorithm is used as an aid to their clinical judgement and proficiency, rather than allowing AI tools to replace all human input in clinical decisions. For instance, 'symptom-checkers' use computerised algorithms that ask users to input details about their symptoms. Some facilitate self-diagnosis by offering a range of diagnoses that might fit the user's symptoms; those used as part of medical triage advise the patient whether to seek care, where to seek it and with what level of urgency.

Algorithm-based systems, such as symptom-checkers, should not be followed blindly without regard to a patient's particular clinical features, which may affect the probability of certain diagnoses: for example, past history, concurrent drug use, or circumstances such as occupation or geographical location.

Telemedicine via remote consultation is gaining traction with some because of its perceived convenience. However, it has inherent limitations, including the potential difficulty of spotting non-verbal cues and the inability to examine the patient. In some circumstances, the clinician will wish to bring the patient in for a face-to-face consultation or direct them to have one with another clinician.

Those who create or produce these innovations need independent advice on their legal and regulatory obligations and their indemnity requirements, which may need to account for the potential for multiple serial claims arising from errors or service disruption affecting an AI product. Similarly, where surgical equipment is used, product liability would apply to device or robot malfunction, whether in hardware or software.

To minimise the risk of malfunction or error, any clinician intending to rely on AI equipment should be satisfied that it is in good working order, that it has been maintained and serviced according to the manufacturer's instructions, and that product liability indemnity arrangements are in place.

Doctors should also:

Obtain informed consent from patients and document it, including discussion, where relevant, of the benefits and risks of using AI equipment and of other available treatment options.

Verify the patient's identity and jurisdictional location when undertaking any form of online consultation. Confirming the patient's location is important to avoid inadvertent breaches of legal or regulatory obligations, or the invalidation of indemnity or insurance protection.

Consider whether it is safe to proceed with an online consultation if (1) it would not be easy to arrange to see the patient in person for an examination, should one be indicated; or (2) the clinician perceives that lack of access to the patient's medical records, including drug and allergy history, is a barrier to delivering safe care.

Ensure adequate documentation of the online consultation and consider the need to communicate — with appropriate consent — with any other relevant healthcare professionals involved in looking after the patient.

Adhere to any local checklists before ‘on-the-day’ use.

Only use equipment on which they have received adequate training and instruction.

Consider the possibility of equipment malfunction, including whether they have the skills to proceed with the procedure regardless, and ensure that any additional equipment or resources required in that event would be available.

References available on request

Case study

A GP contacted Medical Protection about hosting an online symptom-checker, commissioned from a private company, on the practice website. The service takes patients through an algorithm via a short questionnaire, at the end of which the patient may be directed to call an ambulance or to contact the community pharmacy or the GP.

Medico-legal advice

While symptom-checking algorithms are becoming increasingly sophisticated, subtle physical signs may still be missed if no clinical examination takes place, and the triage algorithm might not pick up on subtle signs of serious illness requiring urgent attention. To mitigate that risk, doctors should have a low threshold for recommending that the patient attend a face-to-face appointment with a clinician for a detailed clinical assessment.

Another matter to consider is how quickly the symptom-checker provides a response. Some online services give the patient immediate guidance, while others respond more slowly. The GP should explore what options are available within the practice to review patient contacts made via the online service, especially at weekends, to see whether the risk could be managed within available resources.

While online symptom-checkers hosted on practice websites may have more value than a patient performing self-diagnosis via 'Dr Google', all symptom-checkers depend on the information the patient enters. This makes them less reliable than triage and assessment by a qualified professional, particularly if the patient disregards the significance of a particular symptom or is unaware of an important clinical sign.

Therefore, while symptom-checkers may be a useful adjunct, they are not recommended as a substitute for triage. Medical Protection does not support claims relating to the accuracy or reliability of symptom-checkers, as these amount to product liability, which Medical Protection does not indemnify.

However, members may seek assistance if they face criticism of their clinical judgement in interpreting the output of an algorithm.
